
BlackHat EU 2012 – Day 1


Introduction – Back in Amsterdam !

After a 2 year detour in Barcelona, BlackHat Europe has returned to Amsterdam again this year.

After spending a few hours on the train, checking in at The Grand Hotel Krasnapolsky, getting my ‘media’ badge (thank you BlackHat) & grabbing a delegate bag, and finally working my way through a big cup of coffee, day 1 really started for me with Jeff Jarmoc’s talk.

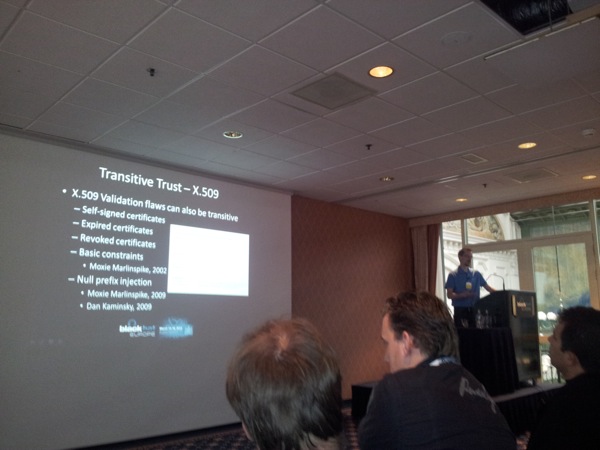

SSL/TLS Interception proxies and transitive trust

Jeff, a senior security researcher at Dell SecureWorks, starts off with a brief history of SSL/TLS.

He explains that the major properties or goals of encryption are to protect privacy, integrity & authenticity. Although SSL was introduced by Netscape in 1994, and transformed into TLS in 1999, the term SSL is still commonly used.

He continues by briefly explaining how SSL sessions work. Basically, client and server have to agree on a cipher suite to use, and the server presents a certificate to the client. Next, the client initiates the ClientKeyExchange, and the server responds with ChangeCipherSpec + Finished messages. From that point on, the session is active. A variation on this process is “mutual authentication”, where the client also sends a certificate to the server. Jeff explains that this causes issues for interception proxies because intercepting that type of session tends to break the client-server communication.

In order for a session to be established, X.509 certificates need to be validated, he continues. There are a couple of components that are important in this validation process :

- certificate integrity (compare the signature of the certificate with the cert hash)

- check for expiration (issue time < current time < expiration time)

- check issuer (trusted ? follow chain to root)

- revocation (CRL or OCSP)
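To make the four checks above a bit more concrete, here is a minimal Python sketch. It does not parse real X.509 certificates: `ToyCert`, `validate` and all names and dates in it are made up for illustration, the signature check is assumed to have happened elsewhere, and a plain set of serial numbers stands in for CRL/OCSP.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ToyCert:
    """Hypothetical, heavily simplified stand-in for an X.509 certificate."""
    subject: str
    issuer: str
    not_before: datetime
    not_after: datetime
    serial: int
    signature_ok: bool = True  # assumption: signature was already compared with the cert hash


def validate(cert, intermediates, trusted_roots, revoked_serials, now=None):
    """Return the names of the checks that failed (empty list = all passed).

    Only the leaf certificate is checked for expiry and revocation here.
    """
    now = now or datetime.now(timezone.utc)
    failures = []

    if not cert.signature_ok:                          # certificate integrity
        failures.append("integrity")
    if not (cert.not_before < now < cert.not_after):   # expiration
        failures.append("expiration")

    # issuer: walk up the chain until we reach a trusted root
    current, visited = cert, set()
    while current.issuer not in trusted_roots:
        parent = intermediates.get(current.issuer)
        if parent is None or parent.subject in visited:
            failures.append("issuer")
            break
        visited.add(parent.subject)
        current = parent

    if cert.serial in revoked_serials:                 # revocation (CRL/OCSP stand-in)
        failures.append("revocation")
    return failures


NOW = datetime(2012, 3, 14, tzinfo=timezone.utc)
START = datetime(2012, 1, 1, tzinfo=timezone.utc)
END = datetime(2013, 1, 1, tzinfo=timezone.utc)

inter = ToyCert("Example Intermediate CA", "Example Root CA", START, END, 10)
leaf = ToyCert("www.example.test", "Example Intermediate CA", START, END, 11)
self_signed = ToyCert("evil.example.test", "evil.example.test", START, END, 12)
chain = {inter.subject: inter}

print(validate(leaf, chain, {"Example Root CA"}, set(), NOW))
print(validate(self_signed, chain, {"Example Root CA"}, {12}, NOW))
```

The first call returns an empty list, the second one reports both the issuer and the revocation failure. An interception proxy has to make exactly these decisions on behalf of the client.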

As a result of a successful certificate check & session setup, we assume that

- privacy is protected (cipher suite prevents sniffing)

- integrity is protected (cipher suite prevents modification)

- authenticity is protected (certificate validation ensures identity)

At the same time, Jeff explains, there’s an increase in malicious use as well, trying to take advantage of the security features encryption provides :

- cipher suite will prevent detection (privacy)

- cipher suite will bypass prevention (integrity)

- certificate validation guarantees authenticity

In other words, attackers know that the use of encryption might bypass certain security layers and take advantage of valid certificates and SSL sessions to do so. Enterprises responded to this problem by adjusting their tools.

Interception proxies

These tools make use of some kind of man-in-the-middle (mitm) technique to inspect & filter out any bad traffic inside the encrypted sessions. As a consequence of this, instead of having just one SSL session between client and server, there will be 2 sessions: one between client and interception proxy, and another one between interception proxy and server. This effectively allows the interception proxy to see the traffic in clear text and inspect it. At the same time, this disrupts authenticity.

In order to avoid certificate warnings on the client side, the client is often configured to trust the certificate that is placed on the interception proxy, used to secure (intercept) traffic between client and interception proxy. In a lot of cases, this certificate is some kind of built-in certificate, so companies often need to implement some kind of certificate management to make sure the communications can be really trusted.

In order to deal with that, companies either use some kind of private CA (which may be tough to manage depending on the size of the infrastructure and IT staff to support it), or use public CA services.

Once this certificate issue has been fixed, an additional set of problems or risks present themselves.

Risks introduced by interception

The first issue is the fact that there are now two separate cipher suite negotiations. The end result may be that the session ends up using weaker crypto than the endpoints support.
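A small Python sketch of that downgrade effect. The negotiation logic is a deliberate simplification (real TLS follows the preference order of the offers), and the strength ranking and the proxy's suite list are assumptions made up for the example.

```python
# Hypothetical strength ranking (higher = stronger); the suite names are only labels.
STRENGTH = {"AES256-SHA": 3, "AES128-SHA": 2, "RC4-MD5": 1}


def negotiate(side_a, side_b):
    """Toy negotiation: pick the strongest suite both sides support, or None."""
    common = set(side_a) & set(side_b)
    return max(common, key=STRENGTH.get) if common else None


client = ["AES256-SHA", "AES128-SHA"]
server = ["AES256-SHA", "AES128-SHA", "RC4-MD5"]
proxy = ["AES128-SHA", "RC4-MD5"]  # assumption: this proxy does not support AES256

direct = negotiate(client, server)      # no proxy in the path
client_leg = negotiate(client, proxy)   # session 1: client <-> proxy
server_leg = negotiate(proxy, server)   # session 2: proxy <-> server

print(direct, client_leg, server_leg)
```

Both endpoints support the strongest suite, but with the proxy in the middle neither leg ends up using it.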

At the same time, the interception proxy becomes a high value target. If the proxy gets compromised, the attacker would gain access to clear text sessions, which may contain private keys. Jeff also explains there might be potential for legal issues as well. Do users need to know that their encrypted sessions are in fact intercepted and can be viewed in clear text somewhere?

Finally, and this is probably the biggest issue, there is a transitive trust issue. The client can no longer independently verify the server identity.

After all, the client is not making a direct connection with the server anymore… and the certificate installed on the interception proxy is trusted by default… it’s often put in place by the local IT organization. Depending on how the proxy is configured, in any case where a server certificate is invalid or flawed, the client might still think everything is fine (because it only sees the session with the proxy). In other words, the flaws might become transitive. You can check out Jeff’s slides & whitepaper for more information on what types of flaws might be present.

Failure modes & impact to risk

If a certificate is invalid, what does the device do ? How will the device behave ? There is no RFC specification for MITM interception, but there are 3 common approaches, Jeff continues:

- Fail closed : Connection is prevented, the web browser shows an “unable to connect” message (which is not really very user friendly)

- Friendly error : Connection is terminated, but the proxy shows a friendly message on the client side (using the existing session), showing some information on why the site was blocked (using the correct context). In some cases, the error message may show a “click here to continue” button, so the user can override. Jeff says that, in some cases, clicking the button will allow traffic for all sessions, not just the current session.

- Passthrough : This is the most common behaviour for name & expiry failures.

It is clear that each of these methods has trade offs.

Tools to test

A great tool to test the behavior and detect potential issues was put together and made available for the public at https://ssltest.offenseindepth.com. If you visit that site from a client that sits behind the proxy you’re trying to test, a series of tests will be performed and a table will be displayed with the results of each test. If you compare the results with the results when connecting directly to the test server (without a proxy), you can see if your proxy is introducing issues or not. (Jeff mentions that, in order to make the test as realistic as possible, you’ll need to add the Null cert CA to your trusted root CA list.)

Cases

During Jeff’s tests, he discovered a few proxy interception products that introduced some issues. One of the most important products was Cisco Ironport Web Security Appliance (v7.1.3-014).

The default configuration settings allowed self-signed certificates and unknown CA roots to be accepted automatically. On top of that, there was a lack of CRL/OCSP checking, basic constraints were not being validated, and there were keypair cache weaknesses. Jeff explained that the issues have not been patched yet, but Cisco is working on making a patch available by July 2012.

From a management perspective, he also discovered that the Ironport appliance does not allow you to change which root CAs to trust… There simply is no UI for managing root CAs, which means you can’t add your own root CA, and you have to trust Cisco on who to trust.

A second product Jeff discussed is Astaro Security Gateway. This appliance (now owned by Sophos) doesn’t check CRL/OCSP. Sophos told Jeff that they decided not to check CRL/OCSP because they believe the CRL/OCSP model is broken in general. Someone in the audience mentioned that Google is also changing their approach to certificate revocation, shifting away from CRL/OCSP and moving to client updates (adding certificates to blacklists).

Not all is bad though. Luckily, Astaro has a friendly error failure mode, supports managing root CAs and certificate blacklists, and gets updated frequently. Furthermore, during his tests, Jeff noticed that both Check Point Security Gateway R75.20 and Microsoft Forefront TMG 2010 SP2 looked fine and acted properly.

Recommendations

Jeff finished his talk by presenting some recommendations, including :

- patch regularly

- test proxies before deployment

- make sure security & end-user experience go hand-in-hand

- inform end-users of interception

- be aware of trust roots

- perform proxy host hardening & monitoring

- think about failure modes (and impact on user experience)

- realize that interception has consequences

If you’re involved with developing some kind of interception proxy : please make sure you allow admins to manage trust roots and optionally blacklist individual certificates. Also, make the default settings secure, test your system under attack scenarios, be wary of aiding attacks against authenticity, provide a way to deploy updates & patches… and secure your private keys !

I really enjoyed this talk. It kept a good balance between some technical aspects of using SSL, the concepts and issues with interception proxies, both from a technical point of view and from a user/legal point of view (which are often overlooked in technical presentations). Good job Jeff!

PS : After talking with Jeff over lunch, he mentioned he would like to get more test results from the community… so if you have a device that does SSL/proxy interception, please get in touch with him to get more details on how to properly set up the test & get him the information he needs.

FYI, You’ve got LFI

The second talk I attended today, after having lunch with Xavier Mertens, Didier Stevens and his wife Veerle, Frank Breedijk and Jeff Jarmoc…, was “FYI, you’ve got LFI”, presented by Tal Be’ery, who is the Web Security research team leader at Imperva.

Background

PHP is all around and by far the most popular server-side dev language. Statistics show that PHP is used in about 77% of the cases, and a lot of major companies (such as Facebook, Wikipedia, Yahoo, etc) make use of it. At the same time, Tal continues, exploiting it often leads to full server takeover. Today, hackers are actively attacking PHP-based RFI/LFI bugs… Where OWASP ranked this type of attack at #3 in 2007, they appear to have dropped it from the top 10 list in 2010… Does that mean the problems are gone ? Of course not.

In order to better understand the problem, he continues his talk by explaining some PHP internals.

PHP internals

The parser starts in html mode, he explains. It looks at the source and ignores everything until it reaches a php opening tag (`<?php`).

RFI

The include() statement is often used to share common code snippets (such as a standard header & footer in your website). Starting with PHP 4.3, you can use remote files as a valid target for the include statement. When the file gets opened by the parser, it will drop to html mode by default (so it will ignore everything until it reaches a php tag). So, if you thought eval() was bad, Tal continues, include() is eval()’s bulimic sister. It not only evaluates arbitrary code, it also eats html placed before the code. He used a little script to demonstrate the issue :

test.php :

`<?php include $_REQUEST['file']; echo "A $color $fruit"; ?>`

The ‘file’ argument allows you to feed it your custom script, inject it and get it executed on the server.
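The “html mode” behaviour is what makes include() so forgiving. The toy Python model below is not how the real PHP parser is implemented and ignores short tags and other corner cases. It only illustrates that whatever precedes the opening tag is passed through untouched, while everything inside the tag would be treated as code. `split_modes` and the sample file content are invented for the example.

```python
import re

# Toy model only: matches "<?php ... ?>" or "<?php ..." up to the end of the file.
PHP_BLOCK = re.compile(rb"<\?php(.*?)(?:\?>|\Z)", re.DOTALL)


def split_modes(data):
    """Split a file into (bytes passed through in html mode, list of php code blocks)."""
    code_blocks = [m.group(1).strip() for m in PHP_BLOCK.finditer(data)]
    passthrough = PHP_BLOCK.sub(b"", data)
    return passthrough, code_blocks


included = b"<html>anything at all</html><?php echo 'hi'; ?>"
passthrough, code_blocks = split_modes(included)

print(passthrough)   # the part the parser does not interpret
print(code_blocks)   # the part that would be executed
```

In other words, an attacker does not need a “clean” php file: any file that contains a php tag somewhere will do.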

Before looking at the TimThumb exploit, he introduces HII (Hacker Intelligence Initiative), an Imperva team which was initiated in 2010 and is used to observe & tap in on exploits used in the wild. He explains that, based on what they captured in the wild, the TimThumb exploit is a special case that abused multiple flaws to work properly :

- it bypassed the “include only from valid domains” list (a host such as picasa.com.moveissantafe.com was allowed if picasa.com was in the list)

- it got around the “upload pictures only” restriction (they used a php script that starts with a valid GIF file header). Since include() ignores everything until it reaches a php tag, this was not an issue.
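The “valid domains” bypass is easy to reproduce in a few lines. The Python sketch below assumes the flawed check was a plain substring match on the host name (I did not verify the exact TimThumb code), and compares it with a stricter suffix match. The function names and the URL paths are made up for the example.

```python
from urllib.parse import urlparse

ALLOWED = ["picasa.com"]  # example entry taken from the talk


def naive_is_allowed(url):
    """Flawed check (assumed): the allowed domain may appear anywhere in the host."""
    host = (urlparse(url).hostname or "").lower()
    return any(domain in host for domain in ALLOWED)


def strict_is_allowed(url):
    """Stricter check: host must be the allowed domain or a subdomain of it."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in ALLOWED)


evil = "http://picasa.com.moveissantafe.com/shell.php"   # hypothetical path
legit = "http://lh3.picasa.com/photo.gif"                # hypothetical path

for url in (evil, legit):
    print(url, naive_is_allowed(url), strict_is_allowed(url))
```

The naive check accepts both URLs, the strict one only accepts the real subdomain.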

Advanced RFI with PHP streams

Streams are a way of generalizing file, network, data compression and other operations, using wrappers such as http://, ftp://, file://, data:// and so on.

From the attacker’s perspective, he may not be in a position to host the shell code somewhere, so he can try to embed the file contents in the request itself and use, for example, a data:// stream to have it read and executed. Since it’s not a commonly used stream, it might be able to bypass filters that block certain protocols/signatures. Using compressed/base64 data, the attack source can be hidden.
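A rough Python sketch of why a protocol blocklist falls short here. Both filters are hypothetical and only look at the start of the parameter value. The first one blocks the “usual” remote protocols, the second one rejects anything that names a wrapper at all.

```python
import base64
import re

# A wrapper/scheme name followed by a colon at the start of the value.
SCHEME = re.compile(r"\s*([A-Za-z][A-Za-z0-9+.\-]*):")


def wrapper_of(value):
    """Return the lower-cased wrapper name at the start of value, or None."""
    match = SCHEME.match(value)
    return match.group(1).lower() if match else None


def naive_filter_blocks(value):
    """Hypothetical filter that only knows the common remote protocols."""
    return wrapper_of(value) in {"http", "https", "ftp"}


def strict_filter_blocks(value):
    """Hypothetical filter that refuses any wrapper in an include parameter."""
    return wrapper_of(value) is not None


# The payload never leaves the request; base64 hides the php tag from simple signatures.
payload = base64.b64encode(b"<?php echo 'hi'; ?>").decode("ascii")
attack = "data://text/plain;base64," + payload

print(attack)
print(naive_filter_blocks(attack), strict_filter_blocks(attack))
print(strict_filter_blocks("header.php"))
```

The naive filter lets the data:// value through, the strict one blocks it while still accepting a plain local file name.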

LFI

RFI is disabled by default : starting with PHP 5.2, the default configuration has allow_url_include = off… Based on statistics, 90% of the deployed PHP versions are >= 5.2.

With LFI, the malicious code must be stored locally. The 2 main options to achieve this are :

- abuse existing file write functionality (log files, …) – maybe by storing code in http headers (if you can get your php code to be saved in a log file, and you can include the local log file => win)

- abuse upload functionality. If improper filtering is performed, a file which includes malicious code may be written onto the server and then included. In some cases, if you’re trying to hide the code inside a picture, you’ll need to make sure the picture can still be displayed, and the code doesn’t get detected by AV. You can try to use metadata fields (exif properties) to store the content… this will meet the first requirement, but a (lower) number of AV engines will still recognize this. By splitting the command and putting the pieces into adjacent property fields, only one AV engine recognized the malicious picture. Tal explains that ClamAV appears to be looking for 3c3f706870, which is hex for `<?php`.
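As a quick sanity check of that hex string, and to show what such a byte signature boils down to, here is a tiny Python sketch. The `contains_signature` helper and the sample bytes are made up. It is not how ClamAV is implemented, just a plain byte search.

```python
SIGNATURE = bytes.fromhex("3c3f706870")


def contains_signature(data):
    """Plain byte search for the signature anywhere in the data."""
    return SIGNATURE in data


picture_with_code = b"GIF89a" + b"\x01\x00\x01\x00" + b"<?php echo 'hi'; ?>"
plain_picture = b"GIF89a" + b"\x01\x00\x01\x00"

print(SIGNATURE)
print(contains_signature(picture_with_code), contains_signature(plain_picture))
```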

In order to detect abusive LFI, Tal explains that you can use VLD to compile the file, inspect the opcodes and make sure it is not going to execute anything. Only an “ECHO” instruction would be allowed… other opcodes indicate that valid php code may be present and thus the file should not be executed.

LFI/RFI in the wild

Today, 20% of all web application attacks are LFI/RFI based (based on the figures captured by the HII systems). LFI is more prevalent (because RFI is usually disabled by default today).

He finishes his presentation by presenting a possible new approach to blocking RFI/LFI. Since the sources & shell URLs in the exploit code are often stable/reused for more than a month, it would be possible, with help from the community, to detect them, gather the data and report it in a central place, so blacklists can be created & used.

Fast-paced talk… a creative/original demo would have been nice to keep the audience sharp, right after lunch. Nevertheless, good work.

A Sandbox Odyssey – Vincenzo Iozzo

The main reason I’m interested in this topic has to do with the fact that, as an exploit developer, I know that we often have to resort to additional layers of protection… not to prevent exploits from happening, but to mitigate the effects and contain the execution of arbitrary code.

In this talk, Vincenzo focuses on sandbox implementations in OSX (Lion). He starts off by mentioning that older versions of OSX lacked a lot of security features, but that things have been rectified in the meantime. With newer versions of IE, Adobe Reader, Google Chrome, etc, the use of sandboxes has increased significantly over the last few years.

He then references “The Apple Sandbox” white paper (Dion Blazakis), which explains the “MAC and TrustedBSD” sandboxing technology implemented in OSX. It becomes clear that the implementation allows quite some flexibility (and thus complexity) by, for example, allowing regular expressions in certain policies.

In short :

- A module registers to the MAC framework

- Each module has policy hooks

- When an action needs to be performed, the hooks are queried to ask for authorization.

- If any of the hooks says no, then access is denied.
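The flow above can be modelled in a few lines of Python. This is a toy model of the “register, query the hooks, any ‘no’ means denied” logic only. It is not the TrustedBSD API, and the module names, operation names and paths are all invented for the example.

```python
class MacFramework:
    """Toy model of the authorization flow; not the real MAC framework API."""

    def __init__(self):
        self._modules = []

    def register(self, name, hooks):
        """hooks maps an operation name to a callable(subject, target) -> bool."""
        self._modules.append((name, hooks))

    def authorize(self, operation, subject, target):
        """Query every registered hook; a single 'no' denies the action."""
        for _name, hooks in self._modules:
            hook = hooks.get(operation)
            if hook is not None and not hook(subject, target):
                return False
        return True  # in this toy model, operations without any hook are allowed


mac = MacFramework()
mac.register("sandbox", {"file-write": lambda subj, path: path.startswith("/tmp/")})
mac.register("audit", {"file-write": lambda subj, path: True})

print(mac.authorize("file-write", "browser", "/tmp/cache.bin"))
print(mac.authorize("file-write", "browser", "/etc/hosts"))
print(mac.authorize("network-outbound", "browser", "example.test:443"))
```

Note the last call: an operation that no module hooks is simply not checked, which is exactly the kind of gap an attacker will look for.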

The nice thing about the MAC framework is that it allows a great deal of granularity. It can restrict access to a variety of components, including :

- File access

- Process mgmt

- IPC

- Kernel interfaces access

- Network access

- Apple events (which should, for example, allow you to prevent a script from telling Finder to execute an app)

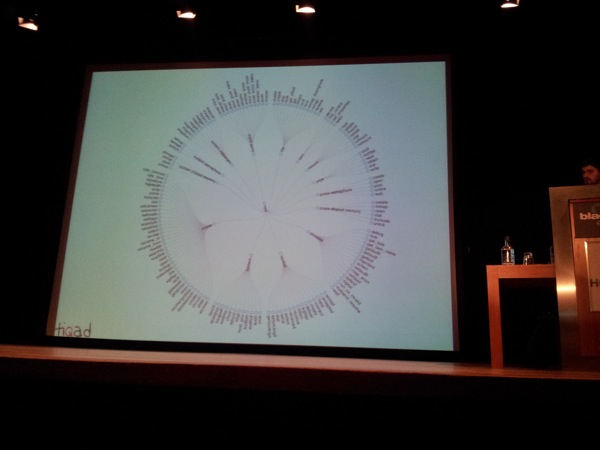

The following drawing demonstrates the complexity & granularity of the sandbox:

Some of these actions are not checked at all (mach ports, etc)

When trying to write code to escape from the sandbox environment, Vincenzo says, these are often the approaches :

Sandbox escape ideas : 1. ideal scenario

- look at the available kernel surface

- mess with IOKit interfaces… “jailbreakme.com” : use a remote exploit in a browser to get further access and escape from the sandbox

Idea 2 : “shatter” attacks

- can you convince/force launchd to do stuff for you ?

- can you convince/force Mach RPC servers to do stuff for you ?

- what about Applescript ?

He briefly explains what launchd is (a very powerful userland daemon responsible for spawning processes, etc) and mentions that in today’s versions of OSX, additional checks have been implemented to prevent abuse.

mach RPC servers : He suggested to read “Hacking at Mach Speed” (Dino)

applescript : used to allow multiple processes to talk to each other through apple events

What can be done in reality ?

Profiles : Vincenzo looked at various profiles and noticed they are usually pretty big… they usually start with “deny all” and then “allow certain things”.

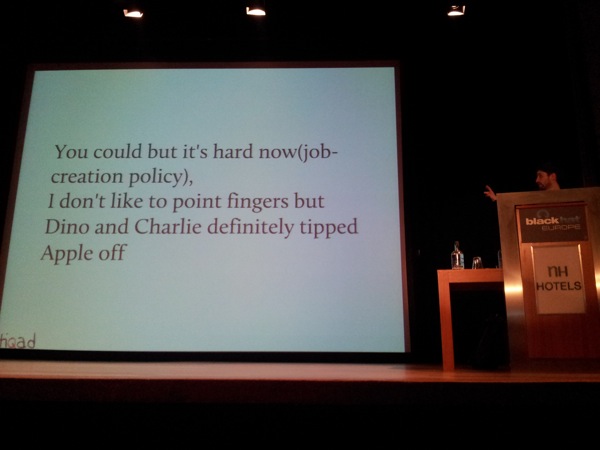

He also looked at the default sandbox settings for applications from the AppStore. He mentions that, by default, things such as fork are possible, and there would be a way to allow the download & execution of a payload. Chrome did a good job by, for example, preventing access to IOKit altogether.

Anyways, default settings often allow

- execute files

- write to small parts of the file system

- read bigger parts of the file system

- reply to applescript requests

- etc

Vincenzo continues by demonstrating how having network access would already allow you to, for example, read the keychain from the user’s home folder and copy it.

Apple wants to make sure the transition from non-sandboxed apps to sandboxed apps goes smoothly. They implemented “temporary exceptions” that will allow a lot of stuff, to make sure things don’t get terribly broken :

- com.apple.security.temporary-exception.apple-events

- com.apple.security.temporary-exception.mach-lookup.global-name

- com.apple.security.temporary-exception.files

The reality is that these temporary exceptions make the sandbox a lot weaker.
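If you want to check which of these exceptions an app asks for, a short Python sketch like the one below could list them, assuming you already have the app’s entitlements as an XML property list. How you extract them is out of scope here, and the sample plist is made up for the example.

```python
import plistlib

PREFIX = "com.apple.security.temporary-exception."


def temporary_exceptions(entitlements_xml):
    """Return the sorted temporary-exception keys found in an entitlements plist."""
    entitlements = plistlib.loads(entitlements_xml)
    return sorted(key for key in entitlements if key.startswith(PREFIX))


# Made-up sample: a sandboxed app that still asks for an apple-events exception.
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<plist version="1.0">
<dict>
    <key>com.apple.security.app-sandbox</key>
    <true/>
    <key>com.apple.security.temporary-exception.apple-events</key>
    <array>
        <string>com.apple.finder</string>
    </array>
</dict>
</plist>"""

print(temporary_exceptions(SAMPLE))
```

An empty list is what you would hope to see; every key that shows up is a hole punched in the profile.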

The MAC framework is pretty solid, but the success depends on policies and configurations.

Food for thought : irony of browsers & sandboxing

People tend to move to the cloud (data, email, etc)… At that point, sandboxes become pointless : since data is accessible from within the web browser (and not stored on the local filesystem), there is no need to escape from the sandbox anymore.

credentials & cookies : HTML5 Application Cache may be used to show information that should be fetched from the server. The application cache is needed to allow users to run certain apps when they are offline. Safari only has one application cache for all apps (sqlite database). Again, you don’t need to get out of the sandbox… just corrupt the cache, which forces the client to fetch the data again… mitm that, and steal the info. Or, if you can write to the db, you could inject a fake entry for a certain cache entry and use it to steal cookies, he explains.

Finally, there’s the problem of data separation. Apple wants to force the use of an API to let applications communicate with each other. Instead of allowing the app developer to implement (and secure) his own IPC protocol to let child sandboxed processes talk to the parent process, Apple wants developers to rely on code written by Apple, which would potentially allow full access to processes if a flaw in that code is discovered and abused.

This was a rather technical talk, but Vincenzo managed to explain things well, and make it easy to understand… He most certainly knows what he is talking about. He has no doubt that sandboxing is a good idea, but also mentions Apple might need to consider making substantial changes to their core framework to make things more secure.

The most important take-away from this talk is that we’ll have to keep in mind that sandboxing your browser won’t matter in the future. After all, hackers don’t need to get control over the machine… they just want the data, which is usually accessible from the browser, inside the sandboxed process…

Hacking XPath 2.0

The last presentation of the day was titled Hacking XPath 2.0, presented by Sumit Siddharth & Tom Forbes of 7Safe. After introducing themselves, both protagonists explain what XPath is : a query language for selecting nodes in an XML document… something like “sql for xml”. The first version of the XPath standard was released in 1999; version 2.0 became a W3C Recommendation in 2007, with a second edition published in December 2010.

Next, Sumit explains how a typical XML document is structured, starting with a root node, over nodes, to node names, node values and comments. XPath is used often because it allows you to query basically anything you want (complex queries) in the XML datasets, even providing an easy way to perform joins and unions. The XPath 2.0 version adds new functions which make it even more powerful.

The main issue with XPath is the fact that unvalidated input can still end up in the XPath query that is run against the XML data. This can cause issues that are very similar to SQL Injection, and lead to authentication bypass, business logic bypass or even extraction of arbitrary data from the xml database.
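To show what the authentication bypass looks like, here is a small Python sketch that only builds the query string (no XPath engine involved). The document layout (/users/user with username and password children) and the function name are assumptions made up for the example.

```python
def build_login_query(username, password):
    """Vulnerable on purpose: user input is concatenated straight into the XPath query."""
    return ("/users/user[username/text()='%s' and password/text()='%s']"
            % (username, password))


normal = build_login_query("alice", "s3cret")
injected = build_login_query("alice' or '1'='1", "x")

print(normal)
print(injected)
```

In XPath, `and` binds tighter than `or`, so the injected predicate reads as “username is alice, or ('1'='1' and password is x)”. The first half is true for alice’s node regardless of the password, so the query selects her entry without knowing her password.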

Sumit also mentions that a lot of automated web pentesting tools are unable to find XPath injection vulnerabilities. He demonstrated some additional checks that can be performed to find and exploit XPath injection issues, even if you are faced with some kind of “blind” injection scenario. The difference here is that the source is actually not a SQL database, but an XML document.

Exploiting XPath Injection in an automated manner

In order to exploit XPath injection, a set of useful functions is presented :

- count

- name

- string-length

- substring

Using those functions, they explain, it’s possible to reproduce the XML structure, attributes and their values (which would require some bruteforcing, one character at a time, while monitoring the responses… but it most certainly is possible).
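The character-by-character approach can be sketched as follows. This is not xcat’s code: the `MockOracle` class stands in for the vulnerable application (a real oracle would send the payloads mentioned in the comments and look at the true/false difference in the responses), and the alphabet and the secret node name are assumptions for the example.

```python
import string

ALPHABET = string.ascii_lowercase + string.digits + "_-"


class MockOracle:
    """Stand-in for the target: it only ever answers true or false."""

    def __init__(self, secret):
        self._secret = secret
        self.requests = 0

    def length_equals(self, n):
        # payload idea: string-length(name(/*[1]))=n
        self.requests += 1
        return len(self._secret) == n

    def char_equals(self, position, char):
        # payload idea: substring(name(/*[1]),position,1)='char'  (XPath counts from 1)
        self.requests += 1
        return self._secret[position - 1] == char


def extract(oracle, max_len=64):
    """Recover a string using nothing but yes/no answers."""
    length = next(n for n in range(max_len + 1) if oracle.length_equals(n))
    chars = []
    for position in range(1, length + 1):
        chars.append(next(c for c in ALPHABET if oracle.char_equals(position, c)))
    return "".join(chars)


oracle = MockOracle("users")
print(extract(oracle), oracle.requests)
```

The sketch simply raises StopIteration when a character is not in the alphabet. The number of requests grows with alphabet size times string length, which is why the speed-ups discussed later in the talk matter.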

Using the comment() function, it would even be possible to read comments from the xml database source.

Putting all of that together, they explained the steps needed to automate XPath Injection to dump the contents of the XML database.

XCat, a python script, will allow you to exploit a blind XPath Injection scenario and extract/reconstruct an XML document. This tool is free / open source and can be downloaded from https://github.com/orf/xcat

The script is based on bruteforcing characters… but with enough time, it will allow you to dump the entire database/document. Using some additional XPath 1.0 trickery (using the substring-before function), they should be able to speed up the process significantly (and implement this technique in xcat).

XPath 2.0, the latest edition of the standard, has a lot more functions which can be helpful in the process of exploiting XPath Injections (or at least speed up the process of bruteforcing). From an automation point of view, there is even a way to detect if the target is using XPath 1.0 or 2.0, by using a function that was added in XPath 2.0 (and doesn’t exist in 1.0) : lower-case('A')

On top of that, XPath 2.0 has an error() function which can be used to perform error-based blind attacks.

Unfortunately, Sumit & Tom say, XPath 2.0 doesn’t seem to be widely supported or used yet.

Tom continues by explaining that the new doc() function (available in 2.0) would allow you to do “cross file” joins and read arbitrary xml files locally or remotely. This not only allows you to read the file, but you can also use it to connect to an HTTP server and dump the contents of the file you want to read. This will speed up the process of dumping an XML document.

Another function, getcwd(), allows you to get the absolute path to the xml file.

XQuery

Next, they elaborated a bit on XQuery, which is a superset of XPath… it is a query language and a programming language, supporting FLWOR expressions : For, Let, Where, Order by and Return. Obviously, XQuery is also susceptible to injection, providing the attacker with a lot more tools to extract data from the XML database. In fact, it would be possible to dump the entire database with one big XQuery.

Worst case, even if the backend parser doesn’t support these newer features, you can still rely on the XPath 1.0 features and dump the database (in a slower fashion).

eXist-DB

Finally, a few words are mentioned about eXist-DB, which is a native XML database (not a flat file). Using the doc() function, they explain, database calls can still be executed within XPath and the results can be retrieved. Using the HTTP Client feature, enabled by default in the eXist-DB engine, you might even be able to retrieve the entire XML with a single query, by instructing the database to make an http client connection to a server under your control (and dumping the content posted by eXist-DB).

This talk demonstrates that all input, whether it’s used in a sql query, an XPath query or shown on the screen, must be filtered and treated as potentially malicious. (Whitelisting please !)

Using parameterized queries (separating data from code) and limiting the use of the doc() function are 2 other recommendations provided by the presenters.
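As a minimal illustration of the whitelisting idea, the Python sketch below validates a value before it is allowed anywhere near a query. The allowed character set and the length limit are assumptions you would adapt to your own application, and this complements parameterized queries rather than replacing them.

```python
import re

# Assumption: user names only ever consist of these characters, 1 to 32 of them.
USERNAME_OK = re.compile(r"[A-Za-z0-9_.-]{1,32}")


def safe_username(value):
    """Return the value unchanged if it matches the whitelist, else raise ValueError."""
    if not USERNAME_OK.fullmatch(value):
        raise ValueError("username contains characters that are not allowed")
    return value


print(safe_username("alice"))

try:
    safe_username("alice' or '1'='1")
except ValueError as exc:
    print("rejected:", exc)
```

Quotes, spaces and brackets never make it into the query this way, so the injected predicate from the earlier examples cannot be constructed.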

As of now, the current XPath functions won’t allow you to update the XML data or write to the filesystem, but future versions/functions may provide a way to do this.

Good talk, fast paced and lots of information. Good job.

This concludes my first day of briefings at BlackHat 2012 – time for some networking/social activities with old & new friends :)

See you all tomorrow !

Other blogs covering BlackHat EU 2012 :

http://blog.rootshell.be/2012/03/14/blackhat-europe-2012-day-1-wrap-up/

© 2012, Peter Van Eeckhoutte (corelanc0d3r). All rights reserved.
