BlackHat EU 2012 – Day 3

Good morning,

Since doing live-blogging seemed to work out pretty well yesterday, I’ll do the same thing again today. Please join in for day 3 at BlackHat Europe 2012, in a cloudy and rainy Amsterdam.

The first talk I attended today was :

“Secure Password Managers” and “Military Grade Encryption” on Smartphones

Andrey Belenko and Dmitry Sklyarov, both from Elcomsoft Co.Ltd (Moscow, Russia), start their presentation by thanking the audience for making it to their talk, after partying last night :)

In this talk, they will present the results of their research on analyzing the security of password manager applications on modern smartphones.

Agenda :

- Authentication on computers vs smartphones

- Threat model

- Blackberry password managers

- iOS Password managers (free vs paid software)

- Summary & Conclusions

Authentication

On PC’s, there typically are a number of options available to developers, Andrey says :

- Trusted Platform Module, often supported by the hardware

- Biometrics, fairly easy to implement today (something you are)

- Smartcard + pin (something you know and something you leave at home) : often used in corporate environments

- Password/Passphrase : still the most popular one, which explains why Elcomsoft is still in business.

On smartphones, the situation is different. There usually is no TPM, biometrics are not available and neither are smart cards. In other words, we usually rely on passwords for authentication. Andrey explains that some smartphones have some kind of processor that might be helpful to further secure authentication, but access to this component is only allowed for the system, and not applications.

Passwords are usually either pins, or lock patterns (which are essentially numeric combinations).

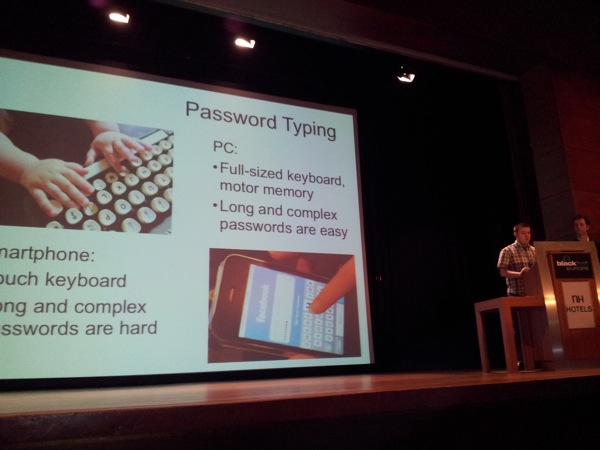

Andrey explains that, because of the limited keyboard size on smartphones, passwords usually are pretty weak on smartphones, compared to what people use on a PC.

From a password cracking perspective, the actual process of cracking passwords is usually offloaded to other devices (PC’s with GPU’s, etc). Cracking passwords on a smartphone is uncommon because of the weak CPU performance.

Finally, on PC’s, users usually log into the workstation just once. On smartphones, people have to unlock their device a lot more often. Handling passwords on a smartphone is much more difficult. Because of the nature of the device, a smartphone really requires stronger password protection, but in real life it provides fewer capabilities to do so.

Threat model

Some assumptions :

The attacker has

- physical access to the device; or

- backup of the device; or

- access to the password manager database file.

The attacker wants to recover the master password to open the password manager on the mobile device and extract the passwords.

It’s pretty realistic to state that smartphones get lost or stolen quite often, so physical access is a very likely assumption to make.

iOS devices need a device passcode or iTunes pairing in order to be able to create a backup. The device needs to be unlocked to backup. There is optional encryption available (enforced by the device) : PBKDF2-SHA1 with 10K iterations on iOS (which is done properly, according to Andrey).

On Blackberry devices, a device password is needed to create a backup, and there is optional encryption available (but it’s not enforced by the device). Encryption is done via PBKDF2-SHA1 with 20K iterations (even more than iOS), but again, it’s not enforced, it’s handled by the Desktop application instead.
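To get a feel for what those iteration counts mean, here is a minimal Python sketch of PBKDF2-SHA1 key derivation using the standard library. The password, the salt and the 32-byte key length are my own assumptions for illustration; the talk only mentioned the algorithm and the iteration counts, not how the salt or the output are handled in the real backup formats.

```python
import hashlib

def derive_backup_key(password: str, salt: bytes, iterations: int, key_len: int = 32) -> bytes:
    """Derive a key with PBKDF2-HMAC-SHA1 (key_len is an assumed value)."""
    return hashlib.pbkdf2_hmac("sha1", password.encode("utf-8"), salt, iterations, dklen=key_len)

# Hypothetical fixed salt so the example is reproducible; real backups use their own salt.
salt = bytes(range(16))

ios_key = derive_backup_key("hunter2", salt, 10_000)  # iteration count quoted for iOS
bb_key = derive_backup_key("hunter2", salt, 20_000)   # iteration count quoted for Blackberry

print(ios_key.hex())
print(bb_key.hex())
```

Every password guess costs the attacker the full number of iterations, which is why a higher count directly slows down brute forcing.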

With respect to accessing the database file itself :

On Apple iOS :

- via the afc protocol (needs a passcode or iTunes pairing)

- via ssh (jailbroken device).

- via physical imaging (up to iPhone 4)

On Blackberry

- you need the device password (even for physical imaging)

Blackberry Password Managers

Blackberry Password Keeper (included with OS 5)

- Key is calculated using PBKDF2-SHA1 (3 iterations) / PKCS7 padding to fill the last block. The padding value contains the number of bytes of padding.

- Password verification is pretty fast

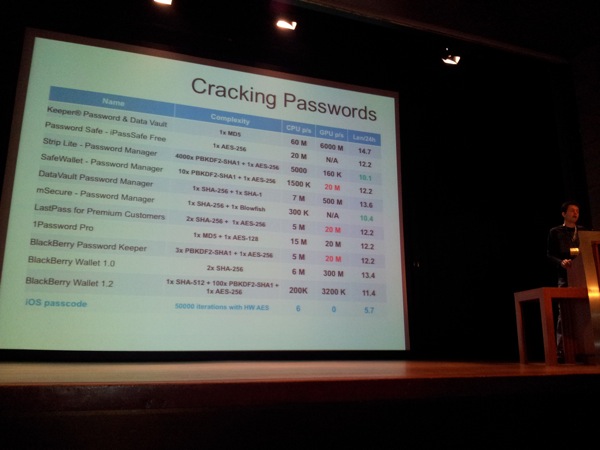

- 3 x PBKDF2-SHA1 + 1 x AES-256

- 5 million passwords can be checked per second on a CPU, about 20 million passwords can be checked with a GPU
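The reason PKCS7 padding helps an attacker is that it gives a cheap way to tell whether a guessed key was wrong. The sketch below shows only that padding check in Python; the AES decryption step is left out (the standard library has no AES), and the "garbage" block simply stands in for what a wrong key would typically produce. The 16-byte block size is the AES block size; the function names are my own.

```python
BLOCK_SIZE = 16  # AES block size in bytes

def pkcs7_pad(data: bytes, block_size: int = BLOCK_SIZE) -> bytes:
    """Append N bytes of value N so the result is a multiple of block_size."""
    pad_len = block_size - (len(data) % block_size)
    return data + bytes([pad_len]) * pad_len

def pkcs7_is_valid(plaintext: bytes, block_size: int = BLOCK_SIZE) -> bool:
    """Return True if the plaintext ends with well-formed PKCS7 padding."""
    if len(plaintext) == 0 or len(plaintext) % block_size != 0:
        return False
    pad_len = plaintext[-1]
    if pad_len < 1 or pad_len > block_size:
        return False
    return plaintext[-pad_len:] == bytes([pad_len]) * pad_len

good = pkcs7_pad(b"secret")   # what a correct key would decrypt to
garbage = b"A" * BLOCK_SIZE   # stand-in for the output of a wrong key

print(pkcs7_is_valid(good))     # True
print(pkcs7_is_valid(garbage))  # False
```

If the output of a wrong key looks random, only roughly 1 in 256 wrong guesses survives this check, so nearly all candidates can be thrown away after decrypting a single block.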

Blackberry Wallet

Version 1.0 (OS5)

- Stores SHA-256 (SHA-256(password))

- Password verification requires 2 x SHA-256

- Very fast to crack : 6 million on a CPU, about 300 million on a GPU

- No randomness, no salt… so you can use rainbow tables to crack
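As a rough illustration of why an unsalted double SHA-256 is so easy to attack, here is a small Python sketch that brute forces a numeric password. It assumes the inner hash is fed to the outer hash as raw bytes and that the password is UTF-8 encoded; the talk did not go into those details, so treat both as assumptions.

```python
import hashlib
from itertools import product

def wallet_hash(password: str) -> bytes:
    """SHA-256(SHA-256(password)), with no salt (encoding is an assumption)."""
    inner = hashlib.sha256(password.encode("utf-8")).digest()
    return hashlib.sha256(inner).digest()

def brute_force_digits(target: bytes, length: int):
    """Try every numeric password of the given length; return the match or None."""
    for candidate in product("0123456789", repeat=length):
        guess = "".join(candidate)
        if wallet_hash(guess) == target:
            return guess
    return None

stored = wallet_hash("4821")          # what the database would contain
print(brute_force_digits(stored, 4))  # 4821
```

Since nothing in the hash depends on the user or the device, the same precomputed results can be reused against every database.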

Version 2.0 is better

- Similar to Password Keeper

- First, creates hash with SHA-512

- PBKDF2-SHA1, nr of iterations is random (50 .. 100)

- 200K passwords on CPU, 3.2 Million on a GPU

iOS Password Managers

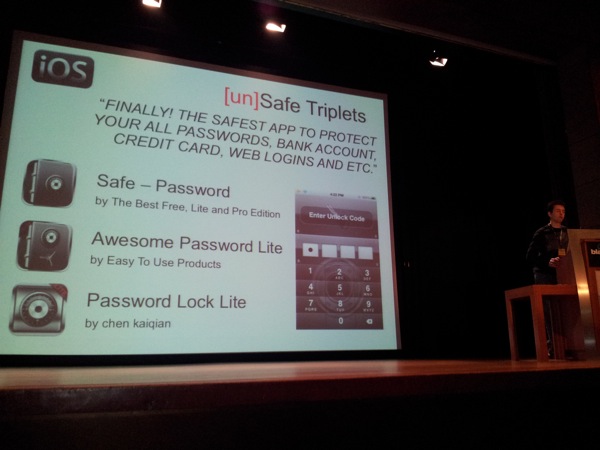

Dmitry explains how the iOS Password Managers work. He explains that they used a simple AppStore search to find password keepers / password managers, found a LOT of applications and tested a couple of them.

Free apps :

They discovered applications that didn’t use any encryption, stored passwords in sqlite databases, and only had a 4-digit PIN as master password or stored the master password in clear text (iSecure Lite, Secret Folder Lite, Ultimate Password Manager Free).

Another application they tested, “My Eyes Only”, stores data in NSKeyedArchiver files, encrypted with RSA. It saves the public & private key in the keychain with attribute kSecAttrAccessibleWhenUnlocked. The length of the key is only 512 bits, and Documents/MEO.Archive, Dmitry explains, holds the RSA encrypted master password. The private key is saved inside the protected data file.. (??)

Keeper Password & Data vault claims to use military-grade encryption. It stores data in a sqlite database. The md5 of the master password is stored inside the database as well. The sha1 of the master password is used as AES key. Putting all of that together (and the fact that no salt is used for the MD5), this allows for cracking of about 60 million keys per second on a CPU, or 6000 million on a GPU. (or use rainbow tables)
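The "or use rainbow tables" remark comes down to precomputation: without a salt, the MD5 of a given password is the same in every database. The Python sketch below uses a plain dictionary as a stand-in for that idea (a real rainbow table is a more compact time-memory trade-off built from hash chains). The word list is made up for the example.

```python
import hashlib

def build_lookup_table(candidates):
    """Precompute unsalted MD5 -> password once, reuse it for every victim."""
    return {hashlib.md5(p.encode("utf-8")).hexdigest(): p for p in candidates}

def recover(stored_md5_hex: str, table: dict):
    """Look up a stored hash; returns the password or None if not in the table."""
    return table.get(stored_md5_hex)

# Hypothetical word list for illustration only.
wordlist = ["123456", "password", "letmein", "qwerty"]
table = build_lookup_table(wordlist)

stored = hashlib.md5(b"letmein").hexdigest()  # value found in the sqlite database
print(recover(stored, table))                 # letmein
```

A per-user random salt would force the attacker to rebuild the table for every database, which is exactly what is missing here.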

Password Safe (iPassSafe) stores data in a sqlite database, and prevents the usage of “weak” passwords. A random master key is used for encryption. Passwords are not hashed, only null padded (PKCS7 padding allows to reject wrong keys). Cracking is very fast (only requires one AES-256 attempt), and there is the option to build & use rainbow tables.

Strip Lite uses a sqlite database file encrypted using the open-source component SQLCipher, developed by Zetetic (the company that wrote the password manager). Dmitry explains that this was by far the most resilient app for password storage. Still possible to crack, but would be much slower compared to other applications.

Paid apps :

So, do paid applications offer better protection ? Dmitry performed a Google search and looked for good reviews for password manager applications.

SafeWallet has versions for Windows, Mac, iOS, Android, Blackberry. The database format is common for all platforms. Master key is encrypted with the master password and data gets encrypted with AES-256, PKCS7. Password verification is fast, testing speed is pretty fast too.

DataVault : data encrypted by the master password and stored in the device keychain. Master password is not hashed, only padded. A SHA-256 of the master password is stored in the keychain. “Keychain is used, so it should be hard to get the hash to brute force the master password, right ?” Unfortunately, in iOS4, the keychain is a sqlite database. The data column is supposed to store passwords and is always encrypted… but DataVault stores the hash in the comment column (not encrypted). iOS5 encrypts all keychain items and stores a hash of the plaintext value to facilitate fast lookups :) In other words, it’s still trivial to do a brute force attack against SHA-1(SHA-256(password)).

mSecure encrypts data with Blowfish. The master key is SHA-256 of the master password. attack speed is relatively slow (because there is no Blowfish optimization on GPU’s yet)

LastPass : ‘Cloud’ service, stores your information somewhere in the internet. Local storage gets created after first login. The master key is SHA-256 of username+password. Attack speed is pretty fast because there is only one iteration.

1Password Pro : Versions for Mac, Windows, iOS, Android. This seems to be a very popular application. There are 2 protection levels : master PIN and master password (depending on the type of data). Data is encrypted with AES-128, the key is derived from the master PIN or master password. The database key, encrypted with itself, is stored for PIN or password verification. It uses PKCS7 padding, which allows wrong keys to be rejected. You don’t need the IV because you only need to decrypt the last block (assuming CBC mode, the preceding ciphertext block acts as the IV for the last block). Cracking is pretty fast (15M on CPU, 20M on GPU).

SplashID Safe has versions for various platforms and is a very popular application. On iOS4, data is stored in sqlite. Sensitive data is encrypted with Blowfish and the master password is used as a Blowfish key. Because of lack of GPU optimization, cracking of Blowfish is slow. The master password is encrypted with… some key… looks like a random key or so… well, after analyzing, they discovered it’s in fact a fixed key. This allows for instant decryption of the master password and decryption of all data almost immediately. Ouch !

Summary & Conclusions

On iOS4, the passcode is involved in encryption of sensitive data. Passcode key derivation is slowed down by doing 50K iterations. Each iteration needs to talk to hardware AES, so only 6 passwords can be checked per second on iOS4. It can’t be performed off-line and scaled. It will take more than 40 hours to crack all 6-digit passwords.
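The "more than 40 hours" figure is easy to check. A quick Python sketch, using only the numbers quoted above (6 guesses per second, numeric passcodes); the function name is mine:

```python
def worst_case_hours(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Hours needed to try every possible passcode at the given rate."""
    keyspace = alphabet_size ** length
    return keyspace / guesses_per_second / 3600

print(round(worst_case_hours(10, 6, 6), 1))  # 46.3 (6-digit passcode)
print(round(worst_case_hours(10, 4, 6), 2))  # 0.46 (4-digit passcode)
```

So a 6-digit passcode holds out for about 46 hours in the worst case, while a 4-digit one falls in under half an hour, even with the on-device rate limit.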

So, putting things together :

- None of the tested password keepers offer something better than is already present in the OS.

- Using them on improperly configured devices may expose sensitive data

- Paid applications are not necessarily better than free ones. During their tests, they discovered the best one was a free one.

Some additional hints :

Users :

- always use passcode on iOS

- set backup passwords (a complex one)

- Do not connect your device to an untrusted device (not even a charger station !)

- do NOT jailbreak the device, it will make it a lot less secure because some security checks are bypassed/no longer used

Developers :

- Use built-in OS security services

- Don’t reinvent or misuse crypto… Really, don’t do it

Apple vs Google Client Platforms

FX begins his presentation by crediting 2 more people he worked with to build the presentation. He explains that they wanted to look at newer client platforms and different approaches used in those platforms, in terms of architecture, software sources, cloud, etc.

Their research focuses on iPad and Google Chromebook.

First of all, he explains that the iPad generates a lot of money. Apple made the iPad to increase revenue and based on the statistics, it worked.

With that in mind, from a design perspective, Apple wanted to provide

- consistent, fluent and simple user interfaces

- integrity protection of the OS & updates

- restriction of third party software

- protecting data is NOT a design goal (because it doesn’t generate any money)

FX mentions that you may want to sign up as a developer on AppStore and look at the signup process and read the contract… you’ll notice some interesting statements such as

- you may be denied access for pretty much any reason

- all rights go to Apple

- Apple has a limited liability ($50)

Signing up costs $99

Google, on the other side of the equation, built the Chromebook because they want to have a platform to display ads. This is a totally different approach and motivation. It’s built to run a single application, a web browser. It’s not designed to run third party apps or store data locally, but it still encrypts all traffic and any local data. It has a fast startup and is built to appeal to the hearts of nerds, FX says, by providing an open and transparent development of the client platform.

The Google Web Store has a simple sign-up process. It only costs $5 (one time). It allows for direct submission of content, no delays.

Apple details

From an iPad Security Architecture point-of-view:

- Standard XNU (Mach + BSD). It has kernel & user processes, only one non-root user “mobile”. It’s not designed to be shared.

- Additional kernel extension “Seatbelt” which provides profile controlled security

- Current versions have ASLR and DEP.

Integrity protection : Protected Boot (of course, this does not protect against issues in the initial bootloader). FX explains that there is a buffer overflow in the ROM boot loader (needed for USB handling – required for recovery boot), which cannot be fixed.

He continues by explaining that the Apple bootloader doesn’t seem to check X509v3 basic constraints. In theory, it would be possible to use the private key stored on the device (used for push notification) to sign arbitrary firmware… but because of some issues in the bootloader code, it didn’t seem to be possible to actually abuse it.

They also discovered that the userspace TLS libraries reveal that X509 basic constraints are not checked either. He explains that it was possible to abuse this to perform a mitm for ssl protected websites.

Binaries are signed with a Mach-O signature. The kernel verifies the integrity of applications and libraries. Signatures are embedded in the Mach-O binaries. FX shows a few examples on how this can be exploited.

Updating the iPad :

- System Updates : covered by the integrity protection mechanisms.

- Application Updates are supplied by the AppStore

- PLMN Carrier updates : carrier bundles can be pushed OTA to your device. It can set APN, proxies etc. It needs to be Signed SomeHow… certainly an area interesting enough to perform further research

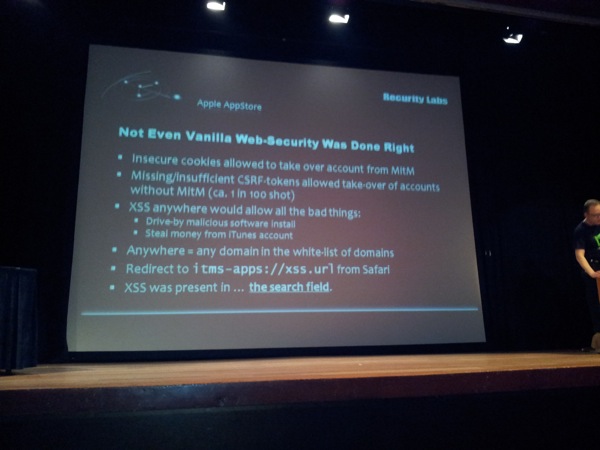

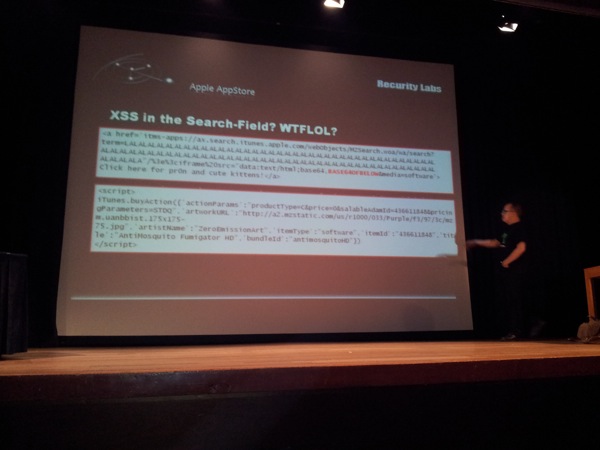

Apple AppStore Architecture

The AppStore / iTunes clients are based on WebKit and have a number of flaws (pop-up issues, MITM, CSRF Tokens are ignored, same origin policy bypass), some of which are pretty hard to fix. One of the examples FX demonstrates is displaying the “enter your apple-id” window which looks like the real one, but is in fact javascript code, written to steal credentials. Combine that with issues such as XSS (yes, the search field had XSS – WTFLOL)… perfect scenario to download + install + run custom software. Apple “fixed” it, by fixing that particular XSS in the search field… :(

Apple pretty much controls your content, forces you to go to the AppStore, and can push arbitrary apps to your device.

Google – the ChromeBook Security Architecture

Access to the device is based on Google Accounts or a Guest account. It’s designed to be shared. It uses eCryptFS to encrypt user data (separately).

It runs a Standard Linux OS, has iptables firewall, etc.

The only thing you’re supposed to be running is the Chrome browser (which uses process separation & sandboxing mechanisms). If you put the device in Developer mode, you’ll get a big warning.

It hides the file system from the user using a hard coded list of allowed file:// URI’s, but apparently Adobe Flash seems to ignore that list.

Pretty similar to what Apple does, Google implemented a chain of checks during boot to protect integrity of all components. The file system integrity protection, FX continues, was implemented pretty well, and is based on hashes and eventually one hash of the bundled hashes. Unfortunately, the partition table is not integrity protected, and neither are the OEM and EFI partitions. There’s a theoretical breach of the integrity protection chain through a kernel argument format string.
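The "hashes and one hash of the bundled hashes" idea can be sketched in a few lines of Python. This is only an illustration of the concept: the block size, the use of SHA-256 and the single-level layout are my assumptions, not details given in the talk about the actual Chromebook implementation.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for the example

def block_hashes(image: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Hash every block of the file system image separately."""
    return [hashlib.sha256(image[i:i + block_size]).digest()
            for i in range(0, len(image), block_size)]

def root_hash(hashes: list) -> bytes:
    """One hash over the bundle of block hashes; only this value must be trusted."""
    return hashlib.sha256(b"".join(hashes)).digest()

def verify_block(image: bytes, index: int, hashes: list, trusted_root: bytes,
                 block_size: int = BLOCK_SIZE) -> bool:
    """Check the hash bundle against the trusted root, then the block against the bundle."""
    if root_hash(hashes) != trusted_root:
        return False
    block = image[index * block_size:(index + 1) * block_size]
    return hashlib.sha256(block).digest() == hashes[index]

image = bytes(3 * BLOCK_SIZE)            # stand-in for a root partition
hashes = block_hashes(image)
trusted = root_hash(hashes)              # would be covered by the signed boot chain

tampered = bytearray(image)
tampered[BLOCK_SIZE + 10] ^= 0xFF        # flip one byte in the second block

print(verify_block(image, 1, hashes, trusted))            # True
print(verify_block(bytes(tampered), 1, hashes, trusted))  # False
```

Anything that is not covered by such a hash (like the partition table and the OEM and EFI partitions mentioned above) can be modified without the check noticing.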

Also, there’s a HW Assisted Backdoor in the device. Although the read only initial bootloader is… readonly, it can be made writeable by opening the device and reprogramming the (well documented) chip. You can replace the boot stub & run arbitrary firmware without getting any warnings.

Chromebook checks for updates every 45 minutes. Updates are incremental modifications to firmware and root partition (which is possible because of the block device model). All traffic is TLS/SSL protected, but there are some issues with the fact that the entire process is online and only gets verified at the end. If something goes wrong in the meantime, you may end up with a bricked device.

The Google Web Store distributes Browser-Extensions and “Downloadable WebApps”. The Chrome-Browser exposes additional API and capabilities to these local JavaScript extensions, depending on permissions (user granting permissions on install). The extensions are partially free, partially paid for, but always come with source. Paid extensions are sold via Google Checkout, so you’ll get a link with an ID to download the extension at the end. Interestingly, FX says, the same links (with unique ID’s) are also used for closed user group testing. If you know the App-ID, you can download & install the app.

In theory, a mitm attack or an API can allow someone to install a custom malicious Extension to the device. Google Sync can sync all installed Extensions to a Google Account. So – if the account is pwned, the browser can be pwned as well.

A lot of the security model obviously relies on the Google Account. If your account is compromised, all is lost. If your session cookie is compromised, your account is compromised. Remember, we are dealing with a model that relies on storing stuff in the cloud. If Google doesn’t like you for whatever reason and closes your account, you’re screwed too. If you need Google to close your account because it was hacked… good luck, they might not care about it (even if you’re HBGary Federal). Other than that, feel free to use the cloud :)

Since Google relies on the browser and thus sessions, it’s important to understand that the entire model stands or falls with the security of your session.

On a sidenote, FX explains that macros in Google Docs get executed server-side. You can import macros written by someone else. The user initially approves the script and it runs. Sure, you can inspect the code if you want to… but if the author decides to change the code at any time and introduces some malicious code, you’ll never know about it.

There’s another issue with allowing 3rd party services to access your data. You basically authorize the exchange of data, but it’s possible to use some token/redirection trickery (Google “I feel lucky” anyone) to spoof a page that would for example ask you to allow www.google.com to get access to your data, but in the back end, it’s using the auth token for another domain/app (which gets used during the redirection)

To sum things up, both Apple and Google want money. Relying on a cloud client platform only is putting all your eggs in one basket, and you have to realize it’s not your own basket. The research shows that even big companies that know what they are doing have web security bugs. Given the way certain things were designed (Apple vs encryption), it’s clear that security is not their primary concern.

GDI Font Fuzzing in Windows kernel for Fun

The presenters, Lee Ling Chuan and Chan Lee Yee, from the Malaysian CyberSecurity agency, part of Mosti (Ministry of Science, Technology and Innovation), explain that fonts fall into 2 categories : GDI Fonts & Device Fonts.

There are 3 types of GDI Fonts in Windows : raster based, vector based and TrueType/OpenType

A raster font glyph is a bitmap that is used to draw a single character. Vector fonts are collections of lines. TrueType/OpenType fonts are collections of lines and curves.

A truetype font (.TTF) file contains data (table) that comprises a font outline. This TTF table contains other tables (EBDT, EBLC and EBSC). The rasterizer uses a combination of data from different tables to render the glyph data in the font.

- EBDT – Embedded Bitmap Data Table : stores the glyph bitmap data. It begins with a header containing the table version number (0x00020000, i.e. version 2.0). The rest of the data is the bitmap data.

- EBLC – Embedded Bitmap Location Table : identifies the size & glyph range of the sbits & keeps offsets to glyph bitmap data in indexSubTables. The table begins with a header (eblcHeader) and contains the table version and the number of strikes. The eblcHeader is followed by the bitmapSizeTable array(s). Each strike is defined by one bitmapSizeTable.

- EBSC – Embedded Bitmap Scaling Table : allows a font to define a bitmap strike as a scaled version of another strike. It starts with a header (ebscHeader) containing the table version and number of strikes. The header is followed by the bitmapScaleTable array.
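To show how these tables are located inside a .TTF file, here is a small Python sketch that walks the table directory at the start of the file and reads the EBLC header (table version and number of strikes). The font blob is synthetic and built in the example itself, so it is not a usable font. I am assuming the version is stored as a 32-bit value of 0x00020000 (2.0); the function names are mine.

```python
import struct

def parse_table_directory(font: bytes) -> dict:
    """Map each 4-character table tag to its (offset, length) in the file."""
    # Offset table: sfntVersion (uint32), numTables (uint16), then three
    # uint16 search helper fields that are skipped here (12 bytes in total).
    _sfnt_version, num_tables = struct.unpack_from(">IH", font, 0)
    tables = {}
    for i in range(num_tables):
        # Each 16-byte record holds: tag, checksum, offset, length.
        tag, _checksum, offset, length = struct.unpack_from(">4sIII", font, 12 + 16 * i)
        tables[tag.decode("ascii")] = (offset, length)
    return tables

def parse_eblc_header(font: bytes) -> tuple:
    """Return (version, number of strikes) from the EBLC table header."""
    offset, _length = parse_table_directory(font)["EBLC"]
    return struct.unpack_from(">II", font, offset)

# Synthetic blob: offset table (12 bytes) + one table record (16 bytes)
# + an 8-byte EBLC header placed at offset 28. All values are made up.
offset_table = struct.pack(">IHHHH", 0x00010000, 1, 16, 0, 0)
record = struct.pack(">4sIII", b"EBLC", 0, 28, 8)
eblc = struct.pack(">II", 0x00020000, 3)
font_blob = offset_table + record + eblc

version, num_strikes = parse_eblc_header(font_blob)
print(hex(version), num_strikes)  # 0x20000 3
```

A font fuzzer that understands this layout can target specific fields (such as the strike count or the offsets in the indexSubTables) instead of flipping bytes blindly.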

A TTF font has a set of instructions and defines how something must be rendered. The itrp_InnerExecute function is the disassembler engine that will process glyph data and map the correct TrueType instructions.

TTF Font file fuzzing

The researchers developed a TTF Font fuzzer to fuzz different sizes. The fuzzer creates a font and installs it automatically in C:\windows\fonts. Next, it registers a window class & creates a window to automate the display of the font text in a range of sizes (using Windows API’s). Finally, it removes the font.
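The overall loop of such a fuzzer could look roughly like the Python sketch below. It only covers generating test cases (a mutated font combined with a range of sizes); installing the font and rendering it through the Windows API is left out. The random byte mutation strategy, the number of mutations and the function names are my assumptions, since the presenters did not detail how their fuzzer creates its fonts.

```python
import random

def mutate(data: bytes, n_mutations: int, seed: int) -> bytes:
    """Overwrite n_mutations randomly chosen bytes; seeded so cases are reproducible."""
    rng = random.Random(seed)
    buf = bytearray(data)
    for _ in range(n_mutations):
        pos = rng.randrange(len(buf))
        buf[pos] = rng.randrange(256)
    return bytes(buf)

def fuzz_cases(font: bytes, sizes, n_cases: int):
    """Yield (case number, font size, mutated font) for every combination."""
    for case in range(n_cases):
        mutated = mutate(font, 4, seed=case)
        for size in sizes:
            # A real harness would install the font and draw text at this size here.
            yield case, size, mutated

sample_font = bytes(64)  # placeholder bytes, not a real font
cases = list(fuzz_cases(sample_font, sizes=[1, 2, 3, 4], n_cases=5))
print(len(cases))  # 20
```

Keeping the seed per case makes it possible to regenerate the exact font that caused a crash, which matters when the crash is a BSOD and nothing else survives.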

Next, they explained the technical details behind the MS11-087 bug, highlighting that the font size must be set to 4 to trigger the vulnerability, and then demonstrated the vulnerability (Windows 7, with DEP, ASLR and UAC enabled, and logged in as a guest account), using a kernel debugger switched to the csrss.exe context, and by using ring0 to ring3 shell code (using an empty loop where you can place any ring3 payload).

With the correct breakpoint set (on win32k!sfac_GetSbitBitmap), they used their fuzzer to prove that size 4 is indeed the one triggering the overflow.

.fon fonts

With regards to Microsoft Windows Bitmapped Font (.fon), they explain that these come in 2 types :

- New Executable (NE), which is the old format used in Windows 3

- Portable Executable (PE), used in Windows 95 and above

They continue with sharing some details on a .fon fuzzer, consisting of 2 scripts :

- mkwinfont.py (Simon Tatham), which creates NE .fon files

- fuzzer.py (Byoungyoung Lee), will fuzz the .fon in different width & heights.

They made 2 modifications to the scripts and reproduced the .fon file bug in MS11-077 (discovered by Byoungyoung Lee), triggering a BSOD (BAD_POOL_HEADER(19) or DRIVER_OVERRAN_STACK_BUFFER(f7)).

Based on their analysis, they state that it’s very difficult to bypass the safe unlinking protection in windows kernel pool. They went thru the various steps that lead to the overflow of 3 bytes of the next pool header and show that this particular bug has some important limitations in terms of what you can control, which makes it hard to exploit.

The additional bug they found (DRIVER_OVERRAN_STACK_BUFFER) gave them more control, but they didn’t research further options for bypassing the kernel canary because the issue was already being patched by Microsoft. The interesting thing about this bug is that you don’t need to open the font file – just a mouse hover is enough to trigger the issue.

This was a truly great, very technical & quite fast paced talk. If you plan on taking a malware reversing class somewhere, you may want to check out their training at Hack In Paris – these 2 definitely know their fu !!

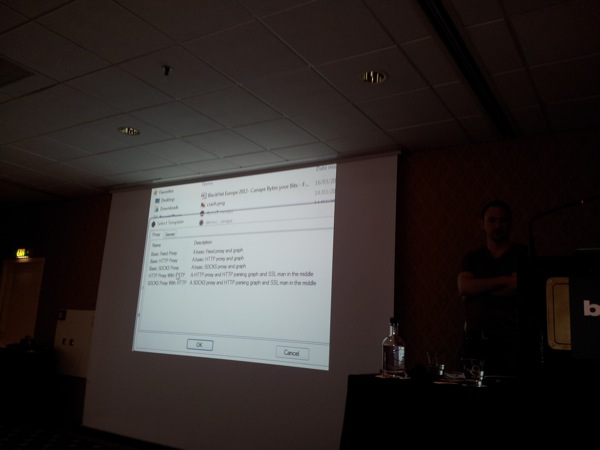

Canape – Bytes Your Bits

In the first talk after lunch (and probably also the last talk I’m going to cover this year – got a train to catch and stuff), James Forshaw and Michael Jordon (from Context) will introduce and release CANAPE, a tool they developed that takes the existing paradigm of Web Application testing tools such as Burp, CAT or Fiddler and applies it to any network protocol.

Michael explains that the tool is a very generic tool to mitm traffic between two hosts and fuzz traffic. Using the tool, they will demonstrate finding and exploiting a bug in the Citrix ICA traffic. In other words, it is a binary network application testing tool.

The core layer of the tool is a mitm engine.

The MITM functionality supports :

- Socks

- Port forwarding

- TCP/UDP/HTTP/Broadcast

- SSL

It also uses pipelines that allow you to use some built-in logic.

They continue by briefly discussing the ICA protocol. In essence, ICA is a protocol used for Citrix XenApp and XenDesktop products. It’s used for remote desktop purposes and to run applications. The ICA protocol uses an ICA configuration file (which allows you to tell ICA to use socks, which is convenient for Canape :)

Next, the tool is demonstrated. They load a basic socks template, and start the proxy engine.

After configuring ICA to use Canape as socks proxy, the tool captures the traffic and displays it.

The ICA protocol is a stream-based protocol. It uses a single TCP stream and has 3 phases :

- Hello

- Negotiation

- Main stream (encryption, compression, multiplexing)

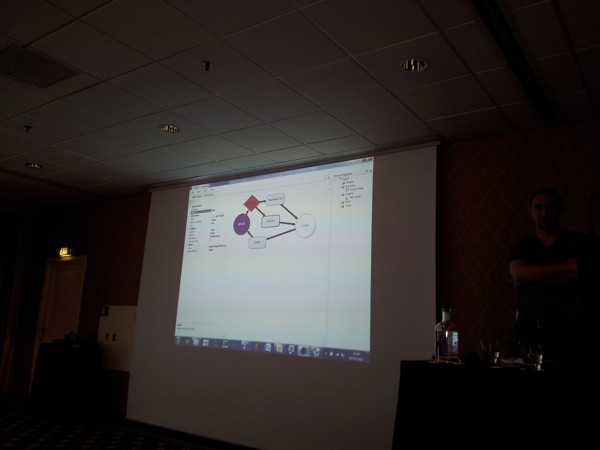

Using the previously captured data, a net graph can be used to represent states in the network traffic. You can add a decision node to the graph (which really is some kind of IF statement) and set a filter on it (looking for the hello magic bytes).

By adding flows and setting a library, you can start breaking the initially captured flow down into the various phases or states. As a side note, from what I have seen, I hope the tool will come with an extensive manual because it looks quite overwhelming in terms of features and configuration steps & requirements.

James explains that the tool allows you to further document the entire protocol (including packets from the main stream) into the application. Using a parser, defining the various components in a single packet, they can further break down the stream/packets into fields (length, flags, data)
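To illustrate what "breaking the stream down into fields" means, here is a minimal Python sketch of a length-prefixed packet parser. The layout (2-byte little-endian length, 1-byte flags, then the data) is purely hypothetical and chosen for the example; it is not the real ICA packet format, and this is not how Canape itself is scripted.

```python
import struct

HEADER = struct.Struct("<HB")  # hypothetical header: uint16 length, uint8 flags

def split_packets(stream: bytes) -> list:
    """Split a byte stream into packets of the form: length, flags, data."""
    packets = []
    pos = 0
    while pos + HEADER.size <= len(stream):
        length, flags = HEADER.unpack_from(stream, pos)
        start = pos + HEADER.size
        data = stream[start:start + length]
        if len(data) < length:
            break  # incomplete trailing packet, wait for more data
        packets.append({"length": length, "flags": flags, "data": data})
        pos = start + length
    return packets

# Two made-up packets glued together, as they would appear on the wire.
stream = HEADER.pack(5, 1) + b"hello" + HEADER.pack(2, 0) + b"ok"
for packet in split_packets(stream):
    print(packet)
```

Once the fields are separated like this, a fuzzer can change the data while keeping the length consistent, or deliberately send a length that doesn’t match.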

After breaking the stream further down, they explain that, since it’s encrypted traffic, they (ideally) would like to strip out the encryption as well. Based on the java implementation of ICA, they found the code that takes care of the encryption.

Canape allows you to run something outside of the tool (python, C#, ruby, visual basic, etc). James demonstrates a C# script, using XPath expressions to access the actual data and capture the key & decrypt it on the fly via a Dynamic node. Of course, they can add an encrypter as well (so they can decrypt, change, and re-encrypt in a later phase).

After decrypting the traffic, they realized that the decrypted data is compressed. Looking at the java code, they noticed the compression code is quite complex, so instead of implementing the compression routine, they decided to use a certain flag in the session to downplay (and basically disable) compression, the equivalent of setting a registry key. Of course, setting the key is not what we want to do, because we don’t necessarily control the other side of the connection, so using the flag (replacing some bytes with other bytes) inside the connection would be more generic.

After decompressing, the traffic starts to look a lot better and shows usernames & passwords in cleartext – the best proof that the changes worked.

Next, they started looking at some of the other traffic, such as key presses, mouse movements, etc. So, after further documenting some of the sessions, you can use the fuzzer functionality in Canape to start changing traffic. From that point forward, all you need is some tool/script to run a debugger + the ICA client and connect to the proxy which will fuzz traffic.

Time to demonstrate an older (now patched) bug in ICA. By controlling a value, used to get an offset in a list, which is then used to get another offset in another list, and then the resulting address is used as a function pointer, code execution would be possible.

In order to make it easier to find data you control, they distilled the required list of packets down to a small number and use the replay service in the tool to have more control over what traffic is sent exactly (instead of randomizing it all the time). After finding the bytes that seem to be important and pointing an integer fuzzer at those bytes, they can further brute force the value to land somewhere in the heap (and perhaps use some kind of heap spray to control that location).

Citrix uses a static buffer for packets. If you send a small packet with a long length value (which doesn’t get checked), it’s possible to perform some kind of heap spray. There are a couple of other issues :

- Block with 0’s : two zero bytes decode as the instruction ADD BYTE [EAX],AL. Since we control EAX (and it contains a valid address), that instruction will work fine.

- Header bytes shouldn’t break the execution when it gets run as opcode.

Putting things together, all ingredients are available to build an exploit.

From an attack vector point of view, Michael explains, you can set up a website, get a client to download the ICA file which contains the target IP/port of your malicious exploit ICA server and exploit the client.

James continues with configuring the tool to set up an HTTP server, and create the necessary packets (flood) to perform the heap spray & deliver the payload.

Finally, they demonstrated a 0day BSOD in a modern version of Citrix Server. (demo only)

Great tool with lots of potential, might have a bit of a learning curve, but looks promising.

I found this Whitepaper that has a lot more info about Canape than what I could capture during the talk.

Check out http://canape.contextis.com – the tool is free, only runs on Windows and uses .Net Framework 4.

That’s it !

Just a few more words of gratitude before I go : Thanks to

- BlackHat for organizing and hosting a great conference again;

- old friends & new friends, for hanging out, having great and entertaining conversations;

- the weather gods, for giving us some fine days;

- you, for dropping by at www.corelan.be and reading this post.

At the same time, as a dad, I also would like to finally take the time to express my deepest condolences to the people who lost their kids or friends in the horrible bus accident earlier this week in Switzerland. Although my mind has been set to BlackHat mode for the last few days and I had the impression the news didn’t really fully hit the masses at Blackhat, it has certainly marked me and made me feel sad.

Bye for now, see y’all later !

© 2012 – 2021, Peter Van Eeckhoutte (corelanc0d3r). All rights reserved.